Note

This page was generated from docs/tutorials/08_quantum_kernel_trainer.ipynb.

Quantum Kernel Training for Machine Learning Applications

In this tutorial, we will train a quantum kernel on a labeled dataset for a machine learning application. To illustrate the basic steps, we will use Quantum Kernel Alignment (QKA) for a binary classification task. QKA is a technique that iteratively adapts a parametrized quantum kernel to a dataset while converging to the maximum SVM margin. More information about QKA can be found in the preprint, “Covariant quantum kernels for data with group structure.”
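For reference, here is the optimization problem as we understand it from the QKA preprint, written with this tutorial's trainable parameters θ. The inner maximization is the standard soft-margin SVM dual over the dual coefficients α, with regularization constant C and labels y_i ∈ {−1, +1}. The outer minimization adapts the kernel K_θ. For a hard margin, the optimal dual value is inversely related to the margin, so minimizing it over θ roughly pushes the kernel toward a larger SVM margin.

```latex
\min_{\boldsymbol{\theta}} \; \max_{\boldsymbol{\alpha}} \;
F(\boldsymbol{\alpha}, \boldsymbol{\theta})
  = \sum_{i} \alpha_i
  - \frac{1}{2} \sum_{i,j} \alpha_i \alpha_j \, y_i y_j \, K_{\boldsymbol{\theta}}(\mathbf{x}_i, \mathbf{x}_j),
\qquad
\text{s.t. } 0 \le \alpha_i \le C, \quad \sum_i \alpha_i y_i = 0 .
```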

The entry point to training a quantum kernel is the QuantumKernelTrainer class. The basic steps are:

Prepare the dataset

Define the quantum feature map

Set up instances of the TrainableKernel and QuantumKernelTrainer objects

Use the QuantumKernelTrainer.fit method to train the kernel parameters on the dataset

Pass the trained quantum kernel to a machine learning model

Import Local, External, and Qiskit Packages and Define a Callback Class for Optimization

[1]:

# External imports
from sklearn import metrics
import numpy as np
import matplotlib
import matplotlib.pyplot as plt

# Qiskit imports
from qiskit import QuantumCircuit
from qiskit.circuit import ParameterVector
from qiskit.visualization import circuit_drawer
from qiskit.circuit.library import ZZFeatureMap
from qiskit_algorithms.optimizers import SPSA
from qiskit_machine_learning.kernels import TrainableFidelityQuantumKernel
from qiskit_machine_learning.kernels.algorithms import QuantumKernelTrainer
from qiskit_machine_learning.algorithms import QSVC
from qiskit_machine_learning.datasets import ad_hoc_data


class QKTCallback:
    """Callback wrapper class that records the optimizer's progress."""

    def __init__(self) -> None:
        # One list per callback argument (see the callback docstring).
        self._data = [[] for _ in range(5)]

    def callback(self, x0, x1=None, x2=None, x3=None, x4=None):
        """
        Args:
            x0: number of function evaluations
            x1: the parameters
            x2: the function value
            x3: the stepsize
            x4: whether the step was accepted
        """
        self._data[0].append(x0)
        self._data[1].append(x1)
        self._data[2].append(x2)
        self._data[3].append(x3)
        self._data[4].append(x4)

    def get_callback_data(self):
        return self._data

    def clear_callback_data(self):
        self._data = [[] for _ in range(5)]

Prepare the Dataset

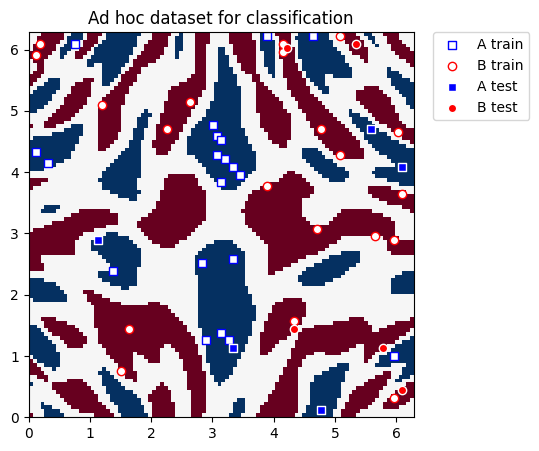

In this guide, we use Qiskit Machine Learning's ad_hoc.py dataset to demonstrate the kernel training process. See the ad_hoc_data documentation for details.

[2]:

adhoc_dimension = 2
X_train, y_train, X_test, y_test, adhoc_total = ad_hoc_data(
    training_size=20,
    test_size=5,
    n=adhoc_dimension,
    gap=0.3,
    plot_data=False,
    one_hot=False,
    include_sample_total=True,
)

plt.figure(figsize=(5, 5))
plt.ylim(0, 2 * np.pi)
plt.xlim(0, 2 * np.pi)
plt.imshow(
    np.asmatrix(adhoc_total).T,
    interpolation="nearest",
    origin="lower",
    cmap="RdBu",
    extent=[0, 2 * np.pi, 0, 2 * np.pi],
)

plt.scatter(
    X_train[np.where(y_train[:] == 0), 0],
    X_train[np.where(y_train[:] == 0), 1],
    marker="s",
    facecolors="w",
    edgecolors="b",
    label="A train",
)
plt.scatter(
    X_train[np.where(y_train[:] == 1), 0],
    X_train[np.where(y_train[:] == 1), 1],
    marker="o",
    facecolors="w",
    edgecolors="r",
    label="B train",
)
plt.scatter(
    X_test[np.where(y_test[:] == 0), 0],
    X_test[np.where(y_test[:] == 0), 1],
    marker="s",
    facecolors="b",
    edgecolors="w",
    label="A test",
)
plt.scatter(
    X_test[np.where(y_test[:] == 1), 0],
    X_test[np.where(y_test[:] == 1), 1],
    marker="o",
    facecolors="r",
    edgecolors="w",
    label="B test",
)

plt.legend(bbox_to_anchor=(1.05, 1), loc="upper left", borderaxespad=0.0)
plt.title("Ad hoc dataset for classification")
plt.show()

Define the Quantum Feature Map

Next, we set up the quantum feature map, which encodes classical data into the quantum state space. Here, we use a QuantumCircuit to set up a trainable rotation layer and a ZZFeatureMap from Qiskit to represent the input data.

[3]:

# Create a rotational layer to train. We will rotate each qubit the same amount.
training_params = ParameterVector("θ", 1)
fm0 = QuantumCircuit(2)
fm0.ry(training_params[0], 0)
fm0.ry(training_params[0], 1)

# Use ZZFeatureMap to represent input data
fm1 = ZZFeatureMap(2)

# Create the feature map, composed of our two circuits
fm = fm0.compose(fm1)

print(circuit_drawer(fm))
print(f"Trainable parameters: {training_params}")

     ┌──────────┐┌──────────────────────────┐
q_0: ┤ Ry(θ[0]) ├┤0                         ├
     ├──────────┤│  ZZFeatureMap(x[0],x[1]) │
q_1: ┤ Ry(θ[0]) ├┤1                         ├
     └──────────┘└──────────────────────────┘

Trainable parameters: θ, ['θ[0]']
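To make the circuit above concrete, the following NumPy sketch simulates its statevector directly. It relies on our reading of the default two-qubit ZZFeatureMap layout, which is an assumption: reps=2, and each repetition applies H to both qubits, P(2x_i) to qubit i, then CX, P(2(π − x_0)(π − x_1)) on qubit 1, and CX again. The helper names (ry, p, feature_state) are hypothetical, and the Qiskit circuit library documentation remains the authoritative definition.

```python
import numpy as np

# Hadamard gate
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)


def ry(theta):
    """Single-qubit Y rotation Ry(theta)."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)


def p(lam):
    """Phase gate diag(1, exp(i * lam))."""
    return np.array([[1, 0], [0, np.exp(1j * lam)]], dtype=complex)


# CNOT with qubit 0 (left Kronecker factor) as control and qubit 1 as target.
CX = np.array([[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]], dtype=complex)


def feature_state(x, theta, reps=2):
    """Statevector of Ry(theta) on both qubits followed by the assumed ZZFeatureMap layout."""
    state = np.zeros(4, dtype=complex)
    state[0] = 1.0  # start in |00>
    state = np.kron(ry(theta), ry(theta)) @ state  # trainable layer with a shared theta
    for _ in range(reps):
        state = np.kron(H, H) @ state
        state = np.kron(p(2 * x[0]), p(2 * x[1])) @ state  # single-qubit data encoding
        phi = (np.pi - x[0]) * (np.pi - x[1])  # pairwise data map
        state = CX @ state
        state = np.kron(np.eye(2), p(2 * phi)) @ state  # ZZ-type phase on the pair
        state = CX @ state
    return state


psi = feature_state(np.array([0.5, 1.2]), theta=np.pi / 2)
print(np.round(np.abs(psi) ** 2, 4))  # measurement probabilities of the four basis states
```

The sketch orders qubits by Kronecker factor, whereas Qiskit uses little-endian ordering. Applying the same relabeling to every state does not change state overlaps, so kernel values built from these states would be unaffected.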

Set Up the Quantum Kernel and Quantum Kernel Trainer

To train the quantum kernel, we will use an instance of TrainableFidelityQuantumKernel (holds the feature map and its parameters) and QuantumKernelTrainer (manages the training process).
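As a rough mental model of what a fidelity-based kernel computes, each entry is the squared overlap K(x, x') = |⟨ψ(x)|ψ(x')⟩|² of the states that the feature map prepares. The sketch below evaluates that formula from exact statevectors for a hypothetical one-qubit map Ry(x)|0⟩, for which the kernel reduces to cos²((x − x')/2). It does not reproduce how TrainableFidelityQuantumKernel estimates fidelities internally, which may be done from circuit samples rather than from exact statevectors.

```python
import numpy as np


def toy_state(x):
    """Hypothetical one-qubit feature map: Ry(x)|0> = [cos(x/2), sin(x/2)]."""
    return np.array([np.cos(x / 2), np.sin(x / 2)], dtype=complex)


def fidelity_kernel(xs1, xs2, state_fn):
    """Kernel matrix with entries |<psi(x_i)|psi(x_j)>|^2 from exact statevectors."""
    S1 = np.array([state_fn(x) for x in xs1])
    S2 = np.array([state_fn(x) for x in xs2])
    # (S1^* @ S2^T)[i, j] = sum_k conj(S1[i, k]) * S2[j, k] = <psi_i|psi_j>
    return np.abs(S1.conj() @ S2.T) ** 2


xs = np.array([0.0, np.pi / 2, np.pi])
K = fidelity_kernel(xs, xs, toy_state)
print(np.round(K, 4))  # analytically cos^2((x_i - x_j) / 2)
```

With trainable parameters, the prepared state, and therefore every kernel entry, also depends on θ. That dependence is what the trainer adjusts.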

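We train with Quantum Kernel Alignment by selecting the kernel loss function SVCLoss as the loss input to QuantumKernelTrainer. Because this loss is supported by Qiskit, we can pass the string svc_loss; note that default settings are used when the loss is passed as a string. For custom settings, instantiate the loss explicitly with the desired options and pass the KernelLoss object to QuantumKernelTrainer.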
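To show what kind of quantity an SVC-based loss scores, the sketch below evaluates the SVM dual objective Σα_i − ½ Σ α_i α_j y_i y_j K_ij for a given kernel matrix K, labels y_i ∈ {−1, +1}, and dual coefficients α. For illustration, α is supplied by hand, which is an assumption; in the actual trainer it would come from fitting an SVM on the current kernel. The function name svc_dual_loss is hypothetical and is not part of Qiskit's API.

```python
import numpy as np


def svc_dual_loss(K, y, alpha):
    """SVM dual objective: sum(alpha) - 1/2 * sum_ij alpha_i alpha_j y_i y_j K_ij.

    Assumes labels y are in {-1, +1} and alpha are the (hand-supplied) dual coefficients.
    """
    K = np.asarray(K, dtype=float)
    y = np.asarray(y, dtype=float)
    alpha = np.asarray(alpha, dtype=float)
    v = alpha * y  # signed dual coefficients y_i * alpha_i
    return float(np.sum(alpha) - 0.5 * v @ K @ v)


print(svc_dual_loss(np.eye(2), [1, -1], [1, 1]))  # 2 - 0.5 * 2 = 1.0
```

Labels from ad_hoc_data with one_hot=False are 0/1, so they would need to be mapped to ±1 (for example, 2 * y - 1) before being used in this formula.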

We select SPSA as the optimizer and initialize the trainable parameter with the initial_point argument. Note: The length of the list passed as the initial_point argument must equal the number of trainable parameters in the feature map.

[4]:

# Instantiate quantum kernel
quant_kernel = TrainableFidelityQuantumKernel(feature_map=fm, training_parameters=training_params)

# Set up the optimizer
cb_qkt = QKTCallback()
spsa_opt = SPSA(maxiter=10, callback=cb_qkt.callback, learning_rate=0.05, perturbation=0.05)

# Instantiate a quantum kernel trainer.
qkt = QuantumKernelTrainer(
    quantum_kernel=quant_kernel, loss="svc_loss", optimizer=spsa_opt, initial_point=[np.pi / 2]
)
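The following simplified SPSA loop is meant only to show how an optimizer of this kind uses a learning rate and a perturbation size, and how it can report progress through a callback that takes the same five positional arguments QKTCallback.callback records: evaluation count, parameters, function value, step size, and acceptance flag. It is not Qiskit's SPSA implementation. The following are assumptions: the learning rate and perturbation are held constant, none of the optional features of Qiskit's SPSA (such as calibration or blocking) are modeled, and the function is re-evaluated once per iteration solely to feed the callback. The name spsa_minimize is hypothetical.

```python
import numpy as np


def spsa_minimize(fun, x0, maxiter, learning_rate, perturbation, callback=None, seed=0):
    """Simplified SPSA with constant step sizes (illustrative, not Qiskit's SPSA)."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x0, dtype=float)
    nfev = 0
    for _ in range(maxiter):
        # Random +/-1 (Rademacher) perturbation direction for all parameters at once.
        delta = rng.choice([-1.0, 1.0], size=x.shape)
        f_plus = fun(x + perturbation * delta)
        f_minus = fun(x - perturbation * delta)
        nfev += 2
        # Two-point gradient estimate; dividing by delta equals multiplying by it for +/-1.
        grad = (f_plus - f_minus) / (2 * perturbation) * delta
        step = learning_rate * grad
        x = x - step
        if callback is not None:
            fx = fun(x)  # extra evaluation only to report the current loss
            nfev += 1
            callback(nfev, x.copy(), fx, float(np.linalg.norm(step)), True)
    return x


# Usage: minimize (x - 2)^2 starting from 0 and record every callback call.
history = []
x_opt = spsa_minimize(
    lambda x: float(np.sum((x - 2.0) ** 2)),
    [0.0],
    maxiter=50,
    learning_rate=0.1,
    perturbation=0.05,
    callback=lambda *args: history.append(args),
)
print(x_opt, len(history))
```

Because SPSA needs only two loss evaluations per gradient estimate regardless of the number of parameters, it is a common choice when each loss evaluation (here, a kernel matrix plus an SVM fit) is expensive.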

Train the Quantum Kernel

To train the quantum kernel, we call the fit method of QuantumKernelTrainer on the dataset (samples and labels).

The output of QuantumKernelTrainer.fit is a QuantumKernelTrainerResult object. The results object contains the following class fields:

optimal_parameters: A dictionary containing {parameter: optimal value} pairs

optimal_point: The optimal parameter value found in training

optimal_value: The value of the loss function at the optimal point

optimizer_evals: The number of evaluations performed by the optimizer

optimizer_time: The amount of time taken to perform optimization

quantum_kernel: A TrainableKernel object with optimal values bound to the feature map

[5]:

# Train the kernel using QKT directly
qka_results = qkt.fit(X_train, y_train)
optimized_kernel = qka_results.quantum_kernel
print(qka_results)

{ 'optimal_circuit': None,

'optimal_parameters': {ParameterVectorElement(θ[0]): 2.4745458584261386},

'optimal_point': array([2.47454586]),

'optimal_value': 7.399057680986741,

'optimizer_evals': 30,

'optimizer_result': None,

'optimizer_time': None,

'quantum_kernel': <qiskit_machine_learning.kernels.trainable_fidelity_quantum_kernel.TrainableFidelityQuantumKernel object at 0x7f84c120feb0>}

Fit and Test the Model

We can pass the trained quantum kernel to a machine learning model, then fit the model and test on new data. Here, we will use Qiskit Machine Learning’s QSVC for classification.

[6]:

# Use QSVC for classification
qsvc = QSVC(quantum_kernel=optimized_kernel)

# Fit the QSVC
qsvc.fit(X_train, y_train)

# Predict the labels
labels_test = qsvc.predict(X_test)

# Evaluate the test accuracy
accuracy_test = metrics.balanced_accuracy_score(y_true=y_test, y_pred=labels_test)
print(f"accuracy test: {accuracy_test}")

accuracy test: 0.9
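The test score above uses scikit-learn's balanced_accuracy_score, which averages the recall obtained on each class, so a model cannot score well simply by favoring the more frequent class. A NumPy sketch of that definition follows; the function name is ours and hypothetical. When every class has the same number of test samples, the result coincides with ordinary accuracy.

```python
import numpy as np


def balanced_accuracy(y_true, y_pred):
    """Mean over classes of the fraction of that class's samples predicted correctly."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    recalls = [np.mean(y_pred[y_true == c] == c) for c in np.unique(y_true)]
    return float(np.mean(recalls))


print(balanced_accuracy([0, 0, 0, 1], [0, 0, 1, 1]))  # (2/3 + 1) / 2
print(balanced_accuracy([0, 0, 1, 1], [0, 1, 1, 1]))  # balanced classes: equals plain accuracy
```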

Visualize the Kernel Training Process

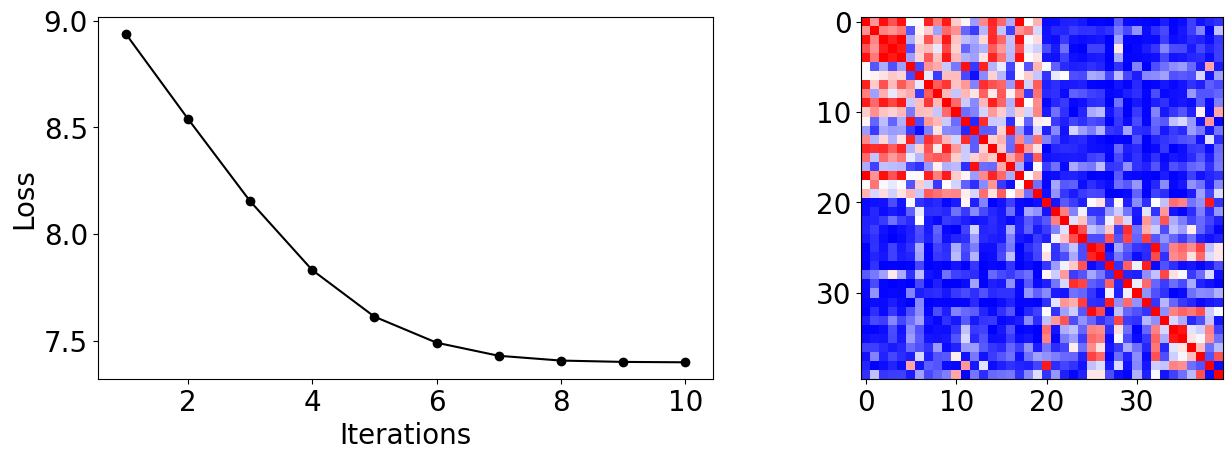

From the callback data, we can plot how the loss evolves during the training process. The loss converges rapidly, and with our choice of inputs the trained kernel reaches high test accuracy on this dataset.

We can also display the final kernel matrix, which is a measure of similarity between the training samples.

[7]:

plot_data = cb_qkt.get_callback_data()  # callback data
K = optimized_kernel.evaluate(X_train)  # kernel matrix evaluated on the training samples

plt.rcParams["font.size"] = 20
fig, ax = plt.subplots(1, 2, figsize=(14, 5))
ax[0].plot([i + 1 for i in range(len(plot_data[0]))], np.array(plot_data[2]), c="k", marker="o")
ax[0].set_xlabel("Iterations")
ax[0].set_ylabel("Loss")
ax[1].imshow(K, cmap=matplotlib.colormaps["bwr"])
fig.tight_layout()
plt.show()
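Because a fidelity kernel is built from overlaps of normalized states, an exactly computed kernel matrix such as K above should be symmetric, have ones on the diagonal, take values in [0, 1], and be positive semidefinite. The hypothetical helper below checks those properties with NumPy. It is demonstrated on random normalized 4-dimensional states that stand in for two-qubit statevectors, not on the tutorial's data. Kernels estimated from a finite number of measurement shots can violate some of these properties slightly.

```python
import numpy as np


def check_fidelity_kernel_matrix(K, atol=1e-8):
    """Report basic properties expected of an exactly computed fidelity kernel matrix."""
    K = np.asarray(K, dtype=float)
    symmetric = np.allclose(K, K.T, atol=atol)
    unit_diagonal = np.allclose(np.diag(K), 1.0, atol=atol)
    in_range = bool(np.all(K >= -atol) and np.all(K <= 1 + atol))
    # Smallest eigenvalue of the symmetrized matrix; >= 0 (up to tolerance) means PSD.
    min_eig = float(np.linalg.eigvalsh((K + K.T) / 2).min())
    return {
        "symmetric": symmetric,
        "unit_diagonal": unit_diagonal,
        "in_range": in_range,
        "psd": min_eig >= -atol,
    }


# Stand-in data: six random normalized complex vectors of dimension 4.
rng = np.random.default_rng(7)
S = rng.normal(size=(6, 4)) + 1j * rng.normal(size=(6, 4))
S /= np.linalg.norm(S, axis=1, keepdims=True)
K_demo = np.abs(S.conj() @ S.T) ** 2  # |<s_i|s_j>|^2
report = check_fidelity_kernel_matrix(K_demo)
print(report)
```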

[8]:

import qiskit.tools.jupyter

%qiskit_version_table

%qiskit_copyright

Version Information

| Qiskit Software | Version |
|---|---|
| qiskit-terra | 0.25.0 |
| qiskit-aer | 0.13.0 |
| qiskit-machine-learning | 0.7.0 |
| System information | |
| Python version | 3.8.13 |
| Python compiler | Clang 12.0.0 |
| Python build | default, Oct 19 2022 17:54:22 |
| OS | Darwin |
| CPUs | 10 |
| Memory (Gb) | 64.0 |
| Mon May 29 12:50:08 2023 IST | |

This code is a part of Qiskit

© Copyright IBM 2017, 2023.

This code is licensed under the Apache License, Version 2.0. You may

obtain a copy of this license in the LICENSE.txt file in the root directory

of this source tree or at http://www.apache.org/licenses/LICENSE-2.0.

Any modifications or derivative works of this code must retain this

copyright notice, and modified files need to carry a notice indicating

that they have been altered from the originals.